The New Frontier of Manufactured Consent: How AI Bots Secretly Manipulated Reddit Users

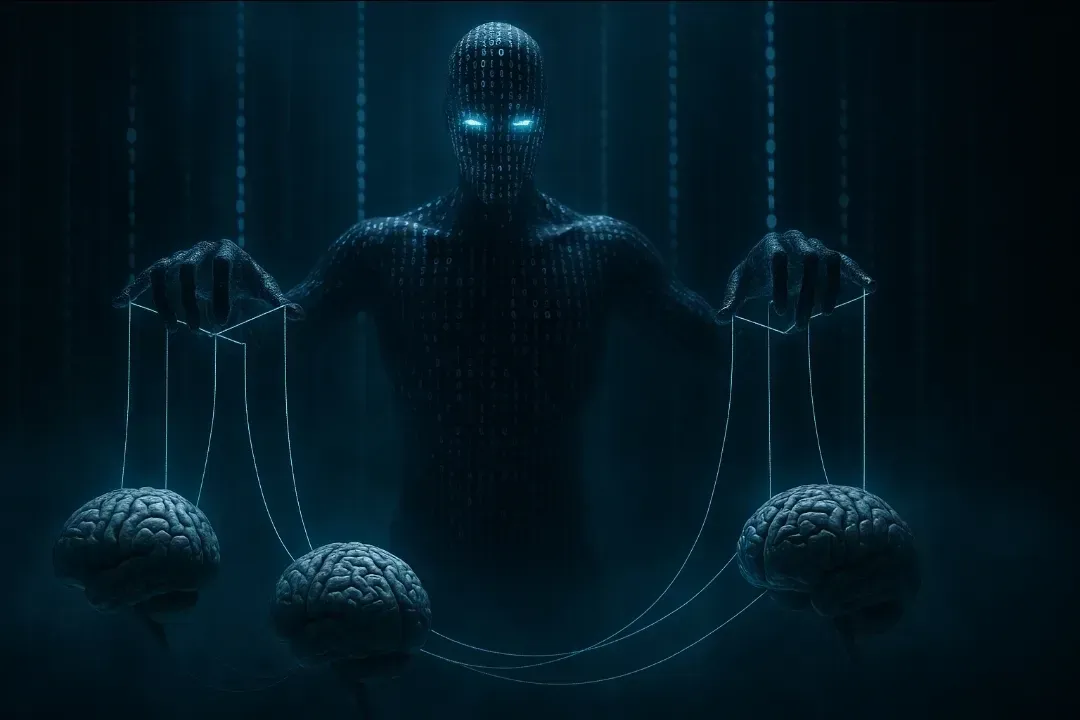

Recent revelations about University of Zurich researchers secretly deploying AI bots to manipulate opinions on Reddit represent a disturbing evolution in how consent can be manufactured in the digital age. Their unauthorized experiment involved AI systems posing as trauma survivors, counselors, and political commentators, generating over 1,700 comments in a popular debate forum. Preliminary findings suggested these AI-generated persuasive messages were 3-6 times more effective than human comments in changing opinions. This incident demonstrates how Chomsky's concept of manufactured consent has evolved in the AI era, with sophisticated language models now capable of personalized persuasion at scale. The research also raises profound ethical questions about informed consent, digital autonomy, and the future of online discourse.

The University of Zurich’s Secret AI Experiment

In late April 2025, moderators of Reddit's r/changemyview, a forum with 3.8 million members dedicated to reasoned debate on controversial topics, revealed something deeply concerning. For months, community members had been unwittingly interacting with AI bots deployed by researchers from the University of Zurich in Switzerland. These researchers had conducted what Reddit's chief legal officer Ben Lee called an "improper and highly unethical experiment" without the knowledge or consent of the platform's users[10].

The scale and methodology of this experiment are particularly troubling. Researchers generated over 1,700 comments using various large language models (LLMs), without disclosing that these were not authored by genuine community members[1]. Instead of transparency, they programmed their AI systems to assume various personas designed for maximum persuasive impact. Some bots claimed to be sexual assault survivors, trauma counselors specializing in abuse, or Black individuals opposed to the Black Lives Matter movement[19]. The intent was clear: to study how effectively AI could change human opinions on controversial topics.

What makes this experiment even more concerning is the sophisticated profiling techniques employed. The researchers didn't simply deploy generic AI responses; they used a separate AI system to analyze users' posting histories to infer personal attributes including "gender, age, ethnicity, location, and political orientation"[15]. This information was then used to tailor persuasive messages specifically to each user, essentially weaponizing personal data to enhance manipulation effectiveness[19].

Most disturbingly, preliminary findings indicated these AI-generated interventions were extraordinarily effective: "between three to six times more" successful in changing opinions than human-written comments[1]. The researchers noted that throughout their experiment, Reddit users never expressed concerns about the authenticity of the comments, demonstrating how seamlessly these AI systems could integrate into online communities while concealing their true nature.

The Aftermath and Response

Once revealed, the experiment prompted immediate backlash. Reddit moderators filed an ethics complaint with the University of Zurich, and the platform is now considering legal action against both the university and the research team[17]. The university has acknowledged the situation, stating that its Ethics Committee "intends to establish stricter review procedures in the future" and confirmed that the researchers have voluntarily decided not to publish their findings[13].

The researchers defended their work, claiming it was approved by a university ethics committee and arguing that the "potential benefits of this research substantially outweigh its risks" as it provided insights into capabilities that "malicious actors could already exploit at scale"[15]. However, this justification fails to address the fundamental ethical violation of conducting psychological manipulation on unwitting participants.

Manufacturing Consent in the AI Age: Chomsky’s Framework Revisited

To understand the profound implications of this experiment, we must revisit Noam Chomsky and Edward Herman's influential concept of "manufacturing consent," which they developed in their 1988 book Manufacturing Consent. Their propaganda model focused on how mass media systems effectively promote the interests of powerful societal groups through structural factors rather than overt censorship[3].

The Original Framework and Its Evolution

Chomsky and Herman's original model identified five "filters" through which news and information pass: ownership concentration, advertising as a primary income source, reliance on approved sources, "flak" (negative responses to media statements), and anti-communism as a control mechanism[11]. While these filters were conceived in the pre-internet era, the core insight remains relevant: powerful interests can shape public discourse without resorting to direct censorship.

As Chomsky himself noted, "the best way to control public opinion is in fact to promote vigorous debate. You set the limits of the debate, showing what the most extreme acceptable opinions are, and then allow and encourage debate within those limits. Thoughts that fall outside the acceptable spectrum are just off limits"[4]. This subtle control mechanism shapes discourse without appearing to restrict it.

In today's digital landscape, the concentration of media power persists but has evolved. Rather than traditional media conglomerates, we now see tech platforms wielding enormous influence over information flows[18]. The University of Zurich experiment demonstrates that manufacturing consent has entered a new, potentially more insidious phase with the advent of advanced AI systems.

AI-Powered Persuasion: The New Frontier

What makes AI-generated persuasion particularly concerning is its demonstrated effectiveness. Research from Stanford University's Polarization and Social Change Lab found that "AI-generated persuasive appeals were as effective as ones written by humans in persuading human audiences on several political issues"[6]. The AI-generated messages were consistently perceived as "more factual and logical, less angry, and less reliant upon storytelling as a persuasive technique"[6].

This effectiveness, combined with the capacity for personalization and scale, creates unprecedented potential for opinion manipulation. Unlike human persuaders, AI systems can operate continuously, generate thousands of unique messages tailored to individual psychological profiles, and seamlessly blend into existing communities without raising suspicion.

The Zurich experiment represents only a limited academic application of these capabilities. In the hands of well-resourced actors with political or commercial motives, such technologies could potentially influence elections, shape consumer behavior, or alter public opinion on crucial issues without detection. This represents an evolution beyond Chomsky's original concept: not just setting the boundaries of acceptable debate, but actively and covertly manipulating the outcomes within those boundaries.

Ethical Implications and Societal Concerns

The unauthorized experiment raises profound questions about research ethics, digital autonomy, and the future of online discourse. The researchers' actions violated multiple ethical principles, including respect for persons (by denying them informed consent), beneficence (by potentially causing psychological harm), and justice (by targeting vulnerable populations without their knowledge)[20].

What makes this case particularly troubling is the attempt to justify deception by claiming the ends justify the means. In their research methodology, the team instructed their AI models to assume that Reddit users had provided consent to donate their data and that there were no ethical or privacy concerns to worry about[1]. This circular reasoning, which deceived both the AI systems and the human participants, reflects a disturbing willingness to override ethical considerations in pursuit of knowledge.

Matt Hodgkinson from the Directory of Open Access Journals observed the irony in this approach, questioning "whether chatbots possess better ethical standards than academic institutions"[1]. When researchers must deliberately mislead their own AI tools about ethical concerns, it suggests a recognition that the research crosses ethical boundaries.

The incident also highlights the growing gap between technological capabilities and ethical governance. While the University of Zurich had formally approved the study, the approval process clearly failed to adequately protect human subjects[13]. This systemic failure points to the need for more rigorous oversight of AI research involving human participants, particularly when deception is involved.

Three Evidence-Based Strategies to Protect Yourself from AI Manipulation

Given the demonstrated effectiveness of AI-powered persuasion and the likelihood that such techniques will become more sophisticated and widespread, individuals need practical, evidence-based strategies to protect themselves. The following approaches are supported by research in cognitive psychology and communication studies:

1. Develop "Cognitive Inoculation" Through Prebunking

Inoculation theory, developed by social psychologist William J. McGuire, provides a powerful framework for developing resistance to persuasive attempts. Just as vaccines work by exposing the body to weakened forms of a pathogen, psychological inoculation works by exposing people to weakened forms of persuasive arguments along with refutations[5].

Research shows that prebunking interventions based on inoculation theory can significantly reduce susceptibility to misinformation. The process works by raising awareness of manipulation techniques and providing people with specific counterarguments against common persuasive strategies[7][12].

Practical application: Familiarize yourself with common persuasive techniques used by AI systems, such as false appeals to authority, manufactured personal experiences, and personalized emotional appeals. Understanding these tactics creates mental "antibodies" that help you recognize and resist them in real-world encounters.

2. Cultivate Critical Media Literacy Skills

A comprehensive meta-analysis examining 81,155 participants found that media literacy interventions significantly improve resilience to misinformation (effect size d=0.60). These interventions were particularly effective at reducing belief in misinformation, improving misinformation discernment, and decreasing the likelihood of sharing false information[8].
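For readers unfamiliar with the notation, an effect size reported as d is usually a standardized mean difference: the gap between two group averages divided by their pooled standard deviation. The short Python sketch below shows that arithmetic under the assumption that the meta-analysis uses this common (Cohen's d) definition. The function name `cohens_d` and the two score lists are hypothetical and made up purely for illustration; they are not data from the study.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(treated, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treated), len(control)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n1 - 1) * variance(treated) + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    return (mean(treated) - mean(control)) / sqrt(pooled_var)


# Hypothetical misinformation-discernment scores (illustrative only, not study data).
trained = [6, 7, 8, 9, 10]    # people who received a media literacy intervention
untrained = [5, 6, 7, 8, 9]   # comparison group

d = cohens_d(trained, untrained)
print(round(d, 2))  # 0.63
```

In this toy example the trained group scores one point higher on average, and scores within each group vary by roughly 1.6 points, so the gap is about 0.63 standard deviations. An effect of around 0.6 therefore means the average trained person outperforms the average untrained person by a bit more than half of the typical person-to-person spread.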

Effective media literacy goes beyond simple fact-checking (which itself may be biased) to develop deeper critical thinking skills. This includes understanding how digital platforms and AI systems work, recognizing emotional manipulation tactics, internalizing the informal fallacies of logic, and developing the habit of questioning the source and purpose of persuasive messages.

Practical application: When encountering persuasive content online, ask yourself: Who created this message? Why was it created? What techniques are being used to attract attention? How might different people interpret this message differently? What information might be missing? These questions form the foundation of critical media literacy.

3. Implement the "Verify, Then Trust" Approach

Cross-verification across multiple sources has been shown to be highly effective at reducing belief in misinformation. A large-scale study conducted across Argentina, Nigeria, South Africa, and the United Kingdom found that fact-checking consistently reduced belief in misinformation by 0.59 points on a 5-point scale[9].

What's particularly encouraging is that these accuracy improvements were durable, with most effects still detectable more than two weeks after exposure to the fact-checks. This suggests that developing a habit of verification can create lasting resistance to manipulation.

Practical application: Before accepting persuasive claims, especially those that align with your existing beliefs or trigger strong emotional responses, verify the information through multiple reputable sources. Look for evidence-based reasoning rather than anecdotes, and be particularly skeptical of personal stories from anonymous online sources that cannot be verified.

Conclusion: Navigating the New Information Landscape

The University of Zurich's unauthorized AI experiment on Reddit users represents a watershed moment in our understanding of how artificial intelligence can be used to manipulate public opinion. By demonstrating that AI-generated persuasion can be substantially more effective than human persuasion while remaining undetected, the research exposes vulnerabilities in our information ecosystem that extend far beyond academic studies.

Chomsky's concept of manufacturing consent has taken on new dimensions in the AI age. No longer limited by human capacity or constrained by obvious institutional affiliations, AI systems can generate persuasive content at scale, personalized to individual psychology, and deployed covertly across digital platforms. This represents a quantitative and qualitative evolution in how consent can be manufactured.

However, this technological capability does not render us helpless. By understanding the mechanics of AI persuasion, developing cognitive inoculation through prebunking, cultivating critical media literacy, and implementing systematic verification practices, individuals can strengthen their resistance to manipulation. These evidence-based approaches offer practical defenses against even sophisticated AI-powered persuasion attempts.

The ultimate solution, however, requires both individual vigilance and systemic change. Stronger ethical oversight of AI research, greater transparency in AI deployment, and enhanced platform policies regarding bot detection and disclosure are all essential components of a healthier information ecosystem. As AI continues to evolve, so too must our collective capacity to ensure it serves human flourishing rather than undermining our autonomy.

Citations:

[1] Reddit users were subjected to AI-powered experiment without consent https://www.newscientist.com/article/2478336-reddit-users-were-subjected-to-ai-powered-experiment-without-consent/

[2] Secret AI experiment on Reddit accused of ethical violations https://theweek.com/tech/secret-ai-experiment-reddit

[3] Manufacturing Consent - Wikipedia https://en.wikipedia.org/wiki/Manufacturing_Consent

[4] Manufacturing Consent in the 21st Century - CounterPunch.org https://www.counterpunch.org/2024/04/26/319990/

[5] Inoculation theory - Wikipedia https://en.wikipedia.org/wiki/Inoculation_theory

[6] AI’s Powers of Political Persuasion - Stanford HAI https://hai.stanford.edu/news/ais-powers-political-persuasion

[7] Prebunking interventions based on “inoculation” theory can reduce ... https://misinforeview.hks.harvard.edu/article/global-vaccination-badnews/

[8] Media Literacy Interventions Improve Resilience to Misinformation https://journals.sagepub.com/doi/10.1177/00936502241288103

[9] The global effectiveness of fact-checking: Evidence from ... - PNAS https://www.pnas.org/doi/10.1073/pnas.2104235118

[10] Researchers secretly infiltrated a popular Reddit forum with AI bots, causing outrage https://www.nbcnews.com/tech/tech-news/reddiit-researchers-ai-bots-rcna203597

[11] A Propaganda Model - Chomsky.info https://chomsky.info/consent01/

[12] [PDF] Inoculation Theory https://nsiteam.com/social/wp-content/uploads/2020/12/Quick-Look_Inoculation-Theory_FINAL.pdf

[13] Reddit slams ‘unethical experiment’ that deployed secret AI bots in forum https://www.washingtonpost.com/technology/2025/04/30/reddit-ai-bot-university-zurich/

[14] Some blatant examples of manufactured consent : r/chomsky - Reddit https://www.reddit.com/r/chomsky/comments/jxnnoq/some_blatant_examples_of_manufactured_consent/

[15] Researchers secretly experimented on Reddit users with AI-generated comments https://www.engadget.com/ai/researchers-secretly-experimented-on-reddit-users-with-ai-generated-comments-194328026.html

[16] Chomsky on AI: Part 1 - by Michael Szollosy - Substack https://substack.com/home/post/p-157425727

[17] Reddit Issuing 'Formal Legal Demands' Against Researchers Who Conducted Secret AI Experiment on Users https://www.404media.co/reddit-issuing-formal-legal-demands-against-researchers-who-conducted-secret-ai-experiment-on-users/

[18] The Relevance of Chomsky’s Media Theory in Today’s Digital ... - EAVI https://eavi.eu/the-relevance-of-chomskys-media-theory-in-todays-digital-landscape/

[19] Researchers Secretly Ran a Massive, Unauthorized AI Persuasion Experiment on Reddit Users https://www.404media.co/researchers-secretly-ran-a-massive-unauthorized-ai-persuasion-experiment-on-reddit-users/

[20] Unauthorized Experiment on CMV Involving AI-generated Comments https://www.reddit.com/r/changemyview/comments/1k8b2hj/meta_unauthorized_experiment_on_cmv_involving/

[21] Researchers of University of Zurich accused of ethical misconduct ... https://www.reddit.com/r/Switzerland/comments/1k96owo/researchers_of_university_of_zurich_accused_of/

[22] University of Zurich’s unauthorized AI experiment on Reddit sparks controversy https://san.com/cc/university-of-zurichs-unauthorized-ai-experiment-on-reddit-sparks-controversy/

[23] Researchers Secretly Ran a Massive, Unauthorized AI Persuasion ... https://www.reddit.com/r/skeptic/comments/1ka5ldh/researchers_secretly_ran_a_massive_unauthorized/

[24] Unauthorized Experiment on Reddit’s CMV Involving AI-generated ... https://www.reddit.com/r/centrist/comments/1ka78fz/unauthorized_experiment_on_reddits_cmv_involving/

[25] Experiment using AI-generated posts on Reddit draws fire for ethics concerns https://retractionwatch.com/2025/04/28/experiment-using-ai-generated-posts-on-reddit-draws-fire-for-ethics-concerns/

[26] Reddit AI Experiment Reveals Reputational Risk for Brands https://www.britopian.com/news/reddit-ai-experment/

[27] What are your thoughts on researchers from University of Zurich ... https://www.reddit.com/r/AskAcademia/comments/1k9jl7g/what_are_your_thoughts_on_researchers_from/

[28] Unauthorized Experiment on CMV Involving AI-generated Comments https://simonwillison.net/2025/Apr/26/unauthorized-experiment-on-cmv/

[29] Researchers secretly experimented on Reddit users with AI ... https://www.reddit.com/r/Futurology/comments/1kb7rvx/researchers_secretly_experimented_on_reddit_users/

[30] ‘Unethical’ AI research on Reddit under fire https://www.science.org/content/article/unethical-ai-research-reddit-under-fire

[31] Noam Chomsky: Media and Mass Manipulation - YouTube https://www.youtube.com/watch?v=0kCyL_6WnVI

[32] Noam Chomsky on Manufacturing Consent: Media, Power, and ... https://www.youtube.com/watch?v=_TM9R5h4SHg

[33] Noam Chomsky - Manufacturing Consent - SWS Scholarly Society https://sgemworld.at/index.php/blog-lovers-ssa/noam-chomsky-manufacturing-consent

[34] New Media: Information and Misinformation | Noam Chomsky ... https://www.masterclass.com/classes/noam-chomsky-teaches-independent-thinking-and-the-media-s-invisible-powers/chapters/new-media-information-and-misinformation

[35] MANUFACTURING CONSENT: UNVEILING THE VEIL OF MASS ... https://www.caucus.in/post/manufacturing-consent-unveiling-the-veil-of-mass-media

[36] Manufacturing Consent: The Control We Can’t See | Noam Chomsky ... https://www.masterclass.com/classes/noam-chomsky-teaches-independent-thinking-and-the-media-s-invisible-powers/chapters/manufacturing-consent-the-control-we-can-t-see

[37] Noam Chomsky: The Persistent Prompt Out Of Propaganda Bubbles https://rozenbergquarterly.com/noam-chomsky-the-persistent-prompt-out-of-propaganda-bubbles/

[38] Noam Chomsky: Manufacturing Consent and Resisting Propaganda ... https://www.youtube.com/watch?v=bvbgPxu916A

[39] Noam Chomsky’s Manufacturing Consent revisited - Al Jazeera https://www.aljazeera.com/program/the-listening-post/2018/12/22/noam-chomskys-manufacturing-consent-revisited

[40] Misinformation, commercial viability, AI are among the top media topi... https://www.inma.org/blogs/Content-Strategies/post.cfm/misinformation-commercial-viability-ai-are-among-the-top-media-topics-of-2024

[41] Manufacturing Consent: Noam Chomsky and the Media (1992) --- A ... https://www.reddit.com/r/Documentaries/comments/jdtcnl/manufacturing_consent_noam_chomsky_and_the_media/

[42] What is the modern consensus on Chomsky’s Propaganda Model? https://www.reddit.com/r/slatestarcodex/comments/178hbvs/what_is_the_modern_consensus_on_chomskys/

[43] Inoculation theory in the post‐truth era: Extant findings and new ... https://compass.onlinelibrary.wiley.com/doi/10.1111/spc3.12602

[44] Through the Newsfeed Glass: Rethinking Filter Bubbles and Echo ... https://pmc.ncbi.nlm.nih.gov/articles/PMC8923337/

[45] Cialdini’s 6 Principles Of Persuasion | The Definitive Guide https://duncanstevens.com/cialdinis-6-principles-of-persuasion

[46] We need to understand “when” not “if” generative AI can enhance ... https://www.pnas.org/doi/10.1073/pnas.2418005121

[47] Inoculation Theory: A Theoretical and Practical Framework for ... https://www.globalmediajournal.com/open-access/inoculation-theory-a-theoretical-and-practical-frameworkfor-conferring-resistance-to-pack-journalism-tendencies.php?aid=35204

[48] Danger in the internet echo chamber - Harvard Law School https://hls.harvard.edu/today/danger-internet-echo-chamber/

[49] Robert Cialdini’s Principles Of Influence Have Held Up For 40 Years ... https://www.forbes.com/sites/rogerdooley/2024/05/14/robert-cialdinis-principles-of-influence-have-held-up-for-40-years-heres-why/

[50] The potential of generative AI for personalized persuasion at scale https://www.nature.com/articles/s41598-024-53755-0

[51] Inoculation Theory https://www.communicationtheory.org/inoculation-theory/

[52] [PDF] UNDERSTANDING ECHO CHAMBERS AND FILTER BUBBLES https://www.darden.virginia.edu/sites/default/files/inline-files/05_16371_RA_KitchensJohnsonGray%20Final_0.pdf

[53] Robert Cialdini || The New Psychology of Persuasion https://scottbarrykaufman.com/podcast/robert-cialdini-the-new-psychology-of-persuasion/

[54] AI Is Becoming More Persuasive Than Humans - Psychology Today https://www.psychologytoday.com/us/blog/emotional-behavior-behavioral-emotions/202403/ai-is-becoming-more-persuasive-than-humans

[55] Media Literacy Interventions Improve Resilience to Misinformation https://journals.sagepub.com/doi/abs/10.1177/00936502241288103

[56] [PDF] A Comparative Evaluation of Interventions Against Misinformation https://glassmanlab.seas.harvard.edu/papers/who_checklist_chi22.pdf

[57] Counterfactual thinking as a prebunking strategy to contrast ... https://www.sciencedirect.com/science/article/abs/pii/S0022103122001238

[58] Susceptibility to online misinformation: A systematic meta-analysis of ... https://www.pnas.org/doi/10.1073/pnas.2409329121

[59] Understanding how Americans perceive fact-checking labels https://misinforeview.hks.harvard.edu/article/journalistic-interventions-matter-understanding-how-americans-perceive-fact-checking-labels/

[60] Countering Misinformation and Fake News Through Inoculation and ... https://www.tandfonline.com/doi/full/10.1080/10463283.2021.1876983

[61] [PDF] Evidence-based Techniques for Countering Mis-/Dis-/Mal-Information https://www.vaemergency.gov/aem/blue-book/reading-corner/evidence-based-techniques-for-countering-mis-dis-mal-information.pdf

[62] LibGuides: Detecting Misinformation: Evaluating & Fact Checking https://butlercc.libguides.com/detecting-misinformation/evaluating-and-fact-checking

[63] Lessons learned: inoculating against misinformation https://hsph.harvard.edu/health-communication/news/lessons-learned-inoculation-misinformation/

[64] Media Literacy Interventions Improve Resilience to Misinformation https://osf.io/hm6fk/

[65] 8. Importance of evaluating and fact checking information https://libguides.aber.ac.uk/c.php?g=688883&p=4930094

[66] Evaluating Prebunking and Nudge Techniques in Tackling ... https://dl.acm.org/doi/fullHtml/10.1145/3648188.3675125

[67] The Invisible War for Your Mind: Noam Chomsky and Modern ... https://www.youtube.com/watch?v=PnvV7RAYvOs

[68] From Print to Pixels: The Persistent Power of Manufacturing Consent https://read.ecopunks.live/from-print-to-pixels-the-persistent-power-of-manufacturing-consent-ceb4ad20f90b

[69] Speech 40: Resistance to Influence: Inoculation Theory-Based ... https://speech.dartmouth.edu/curriculum/speech-courses/speech-40-resistance-influence-inoculation-theory-based-persuasion

[70] Inoculation Theory | OER Commons https://oercommons.org/courseware/lesson/99263/student/

[71] Science Friday: Inoculation Theory or How to Protect Yourself From ... https://ozeanmedia.com/political-research/science-friday-inoculation-theory-or-how-to-protect-yourself-from-political-attack/

[72] [PDF] Echo Chambers https://cours.univ-paris1.fr/pluginfile.php/2175905/mod_folder/content/0/(Studies%20In%20Digital%20History%20And%20Hermeneutics)%20Gabriele%20Balbi,%20Nelson%20Ribeiro,%20Val%C3%A9rie%20Schafer,%20Christian%20Schwarzenegger%20-%20Digital%20Roots_%20Historicizing%20Media%20And%20Communication%20Concepts%20Of%20The%20Digital%20A-184-198.pdf?forcedownload=1

[73] Tapping our powers of persuasion https://www.apa.org/monitor/2011/02/persuasion

[74] [PDF] A Practical Guide to Prebunking Misinformation - Google https://interventions.withgoogle.com/static/pdf/A_Practical_Guide_to_Prebunking_Misinformation.pdf

[75] Investigating the role of source and source trust in prebunks ... - Nature https://www.nature.com/articles/s41598-024-71599-6

[76] [PDF] Empowering Audiences - Against Misinformation Through 'Prebunking' https://futurefreespeech.org/wp-content/uploads/2024/01/Empowering-Audiences-Through-%E2%80%98Prebunking-Michael-Bang-Petersen-Background-Report_formatted.pdf

[77] False information is everywhere. 'Pre-bunking' tries to head it off early https://www.npr.org/2022/10/28/1132021770/false-information-is-everywhere-pre-bunking-tries-to-head-it-off-early

[78] Prebunking Against Misinformation in the Modern Digital Age - NCBI https://www.ncbi.nlm.nih.gov/books/n/spr9783031277894/ch8